Generative Deep Learning for Molecular SMILES Generation

A comparative study of graph‐to‐sequence models using GNNs, Graphormer, and Birdie training for chemically valid molecule design.

Introduction

This experiment investigates advanced graph-to-sequence deep learning approaches for molecular design, focusing on generating chemically valid SMILES strings from molecular graphs. We compare:

- GNN→Transformer: A baseline message-passing GNN encoder with a Transformer decoder.

- Graphormer→Transformer: A structurally-biased Transformer encoder with explicit centrality, spatial, and edge encodings.

- Graphormer + Birdie→Transformer: The Graphormer model further trained with stochastic, corruption-based objectives (infilling, deshuffling, selective copying, standard copying) to improve robustness.

All models are trained with a combined cross-entropy and REINFORCE‐style validity loss, rewarding chemically valid outputs via RDKit validation.
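The exact form of the combined objective is not given here. One common way to write such a loss, offered as an assumption rather than the formulation actually used, adds a weighted policy-gradient term to teacher-forced cross-entropy. In this sketch the weight $\lambda$, the baseline $b$, and the binary reward $R$ are illustrative symbols: $G$ is the input graph, $y$ the reference SMILES, $\hat{y}$ a sequence sampled from the model, and $R(\hat{y}) = 1$ if RDKit parses $\hat{y}$ and $0$ otherwise.

$$
\mathcal{L}(\theta) =
\underbrace{-\sum_{t=1}^{T} \log p_\theta\left(y_t \mid y_{<t}, G\right)}_{\text{cross-entropy}}
\;+\;
\lambda \, \underbrace{\left( -\bigl(R(\hat{y}) - b\bigr) \sum_{t=1}^{\hat{T}} \log p_\theta\left(\hat{y}_t \mid \hat{y}_{<t}, G\right) \right)}_{\text{REINFORCE validity term}}
$$

Because $R$ is computed outside the computation graph, gradients flow only through the log-probabilities of the sampled tokens; the baseline $b$ (for example, a running mean of recent rewards) reduces variance without changing the expected gradient.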

Background and Motivation

Discovering novel drug‐like molecules traditionally requires expensive, manual exploration of vast chemical spaces. Language‐modeling techniques applied to SMILES can generate syntactically valid strings but often miss underlying graph topology, producing chemically invalid compounds. Bridging this gap demands encoder–decoder architectures that respect molecular graphs’ structure while leveraging powerful sequence modeling.

Graph-Based Generative Models

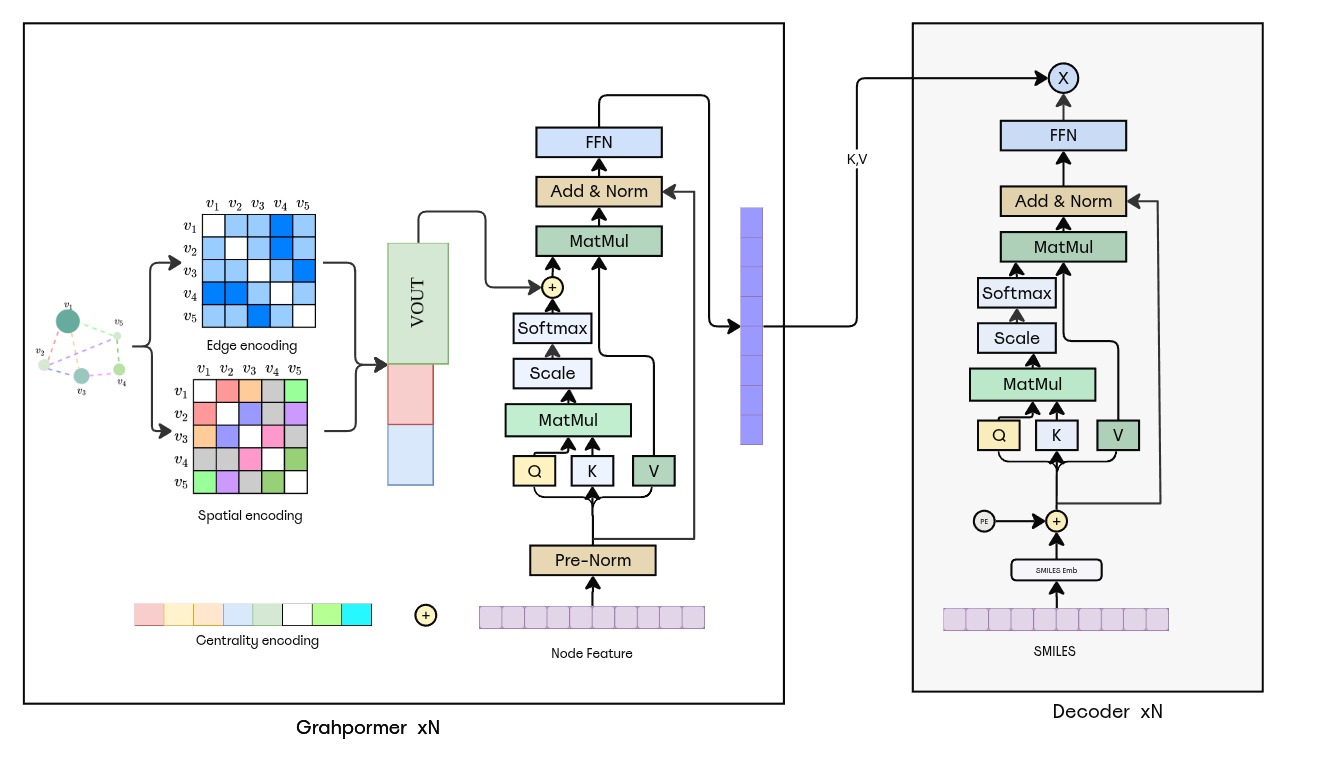

Graph Neural Networks (GNNs) perform local message passing over nodes and edges. This captures local atomic interactions, but GNNs struggle with long-range dependencies and global structure, and stacking more layers to widen the receptive field tends to cause over-smoothing. Graphormer extends Transformers to graphs by injecting:

- Centrality encodings (node degrees)

- Spatial encodings (shortest‐path distances)

- Edge encodings (bond‐type biases along paths)

- Virtual node ([VNode]) for global readout

This combination enables rich, topology-aware representations.
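As a rough illustration of what the centrality and spatial encodings consume, the sketch below computes node degrees and all-pairs shortest-path hop counts for a small molecular graph using only the standard library. The function names and the `unreachable=-1` convention are assumptions made for this example; a real encoder would map these integers to learned embeddings and attention biases.

```python
from collections import deque


def degrees(num_nodes, edges):
    """Input to the centrality encoding: degree of each node."""
    deg = [0] * num_nodes
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg


def shortest_path_matrix(num_nodes, edges, unreachable=-1):
    """Input to the spatial encoding: all-pairs hop counts via BFS."""
    adj = [[] for _ in range(num_nodes)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)

    dist = [[unreachable] * num_nodes for _ in range(num_nodes)]
    for src in range(num_nodes):
        dist[src][src] = 0
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if dist[src][v] == unreachable:
                    dist[src][v] = dist[src][u] + 1
                    queue.append(v)
    return dist


# Heavy-atom graph of acetic acid, CC(=O)O: atoms 0=C, 1=C, 2=O, 3=O
edges = [(0, 1), (1, 2), (1, 3)]
deg = degrees(4, edges)
spd = shortest_path_matrix(4, edges)
```

Here the carbonyl carbon (atom 1) has degree 3, and the two oxygens are two hops apart because their only connection runs through that carbon.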

Problem Statement

- Representation mismatch: Linear SMILES fail to capture graph topology.

- Validity vs. fluency: Models optimized for token‐level accuracy often generate chemically invalid molecules.

- Robustness: Decoders can struggle with incomplete or noisy inputs, limiting diversity and generalization.

Research Objectives

- Effective graph encoding: Compare standard GNN vs. Graphormer encoders.

- SMILES accuracy & validity: Evaluate token-level accuracy and RDKit‐verified validity.

- Robustness via self-supervision: Apply Birdie training objectives to improve long-range dependency modeling.

- Comprehensive benchmarking: Quantitatively assess all variants on accuracy, validity, diversity, and noise resilience.

Contributions

- Architectural comparison of three encoder–decoder variants (GNN, Graphormer, Graphormer+Birdie).

- Structural inductive biases: Integration of centrality, spatial, and edge encodings into attention.

- Validity‐aware loss: A REINFORCE‐style auxiliary loss rewarding chemically valid outputs.

- Birdie multi-task pretraining: Randomized infilling, deshuffling, selective copying, and autoencoding objectives.

- Full PyTorch pipeline: From SDF parsing and feature extraction to batched training and inference.

Data Preparation

We processed a dataset of ~100K molecules from ChEMBL, ensuring chemical diversity and drug-like properties:

- Molecule parsing: SDF files loaded via RDKit, extracting atom/bond features and canonical SMILES.

- Feature extraction:

  - Node features: One-hot atom type, degree, formal charge, hybridization, aromaticity

  - Edge features: One-hot bond type, conjugation, ring membership

- Graph construction: Bi-directional adjacency with feature tensors for nodes and edges.

- Dataset splits: 80% train, 10% validation, 10% test; stratified by molecular weight distribution.

- Statistics:

  - Avg. atoms per molecule: 23 ± 8

  - SMILES length: 50 ± 15 tokens

- Sequence tokenization: Character-level SMILES with special tokens <sos>, <eos>, <pad>.

- Padding & batching:

  - Node features padded to max 50 nodes per batch

  - SMILES sequences padded to max length per batch

- Data augmentation (Birdie only): Random masking/shuffling of graph chunks and SMILES segments during pretraining.
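How the 80/10/10 split was stratified is not detailed above. A minimal sketch of one plausible approach, assuming quantile bins over molecular weight and a fixed seed, is shown below; `stratified_split`, `n_bins`, and the toy weights are hypothetical names and values introduced for illustration only.

```python
import random


def stratified_split(items, keys, n_bins=10, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split items into train/val/test, applying the same ratios inside each
    quantile bin of `keys` (e.g. molecular weight)."""
    rng = random.Random(seed)
    order = sorted(range(len(items)), key=lambda i: keys[i])
    bin_size = max(1, -(-len(order) // n_bins))  # ceiling division

    train, val, test = [], [], []
    for start in range(0, len(order), bin_size):
        bucket = order[start:start + bin_size]
        rng.shuffle(bucket)
        n_train = round(fractions[0] * len(bucket))
        n_val = round(fractions[1] * len(bucket))
        train += [items[i] for i in bucket[:n_train]]
        val += [items[i] for i in bucket[n_train:n_train + n_val]]
        test += [items[i] for i in bucket[n_train + n_val:]]
    return train, val, test


# Toy usage with made-up molecular weights
weights = [100.0 + i for i in range(100)]
mols = [f"mol_{i}" for i in range(100)]
train, val, test = stratified_split(mols, weights)
```

Splitting within bins keeps light and heavy molecules represented in all three subsets, which a plain random split only guarantees on average.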

Data processing from SDF → SMILES & Tokenization

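    # Convert an RDKit molecule to canonical SMILES
    from rdkit import Chem

    # Acetic acid as a stand-in; in the pipeline the molecule comes from an SDF file
    molecule = Chem.MolFromSmiles("CC(=O)O")
    smiles = Chem.MolToSmiles(molecule, canonical=True)  # "CC(=O)O"

    # Character-level tokens with start and end markers
    tokens = ["<sos>"] + list(smiles) + ["<eos>"]
    # ["<sos>", "C", "C", "(", "=", "O", ")", "O", "<eos>"]

    # Decoder input and labels (simple pretraining): labels are the input shifted by one
    dec_input_ids = tokens[:-1]  # ["<sos>", "C", "C", "(", "=", "O", ")", "O"]
    labels = tokens[1:]          # ["C", "C", "(", "=", "O", ")", "O", "<eos>"]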
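To turn tokens like these into padded integer batches, something along the following lines would work. This is a standard-library sketch under assumptions: the helper names (`build_vocab`, `encode`, `pad_batch`) and the choice of index 0 for `<pad>` are illustrative and not taken from the project code.

```python
SPECIALS = ["<pad>", "<sos>", "<eos>"]


def build_vocab(smiles_list):
    """Map special tokens and every character seen in the data to an integer id."""
    chars = sorted({ch for s in smiles_list for ch in s})
    return {tok: idx for idx, tok in enumerate(SPECIALS + chars)}


def encode(smiles, vocab):
    """Character-level encoding wrapped in <sos> ... <eos>."""
    return [vocab["<sos>"]] + [vocab[ch] for ch in smiles] + [vocab["<eos>"]]


def pad_batch(sequences, pad_id):
    """Right-pad every sequence to the longest length in the batch."""
    max_len = max(len(seq) for seq in sequences)
    return [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]


batch_smiles = ["CC(=O)O", "CCO"]
vocab = build_vocab(batch_smiles)
ids = pad_batch([encode(s, vocab) for s in batch_smiles], vocab["<pad>"])

# Teacher forcing: the decoder sees ids[:-1] and predicts ids[1:].
# Padded label positions should be excluded from the loss.
dec_input_ids = [row[:-1] for row in ids]
labels = [row[1:] for row in ids]
```

In PyTorch, passing `ignore_index=vocab["<pad>"]` to `torch.nn.CrossEntropyLoss` is one way to skip the padded label positions.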

Model Architectures

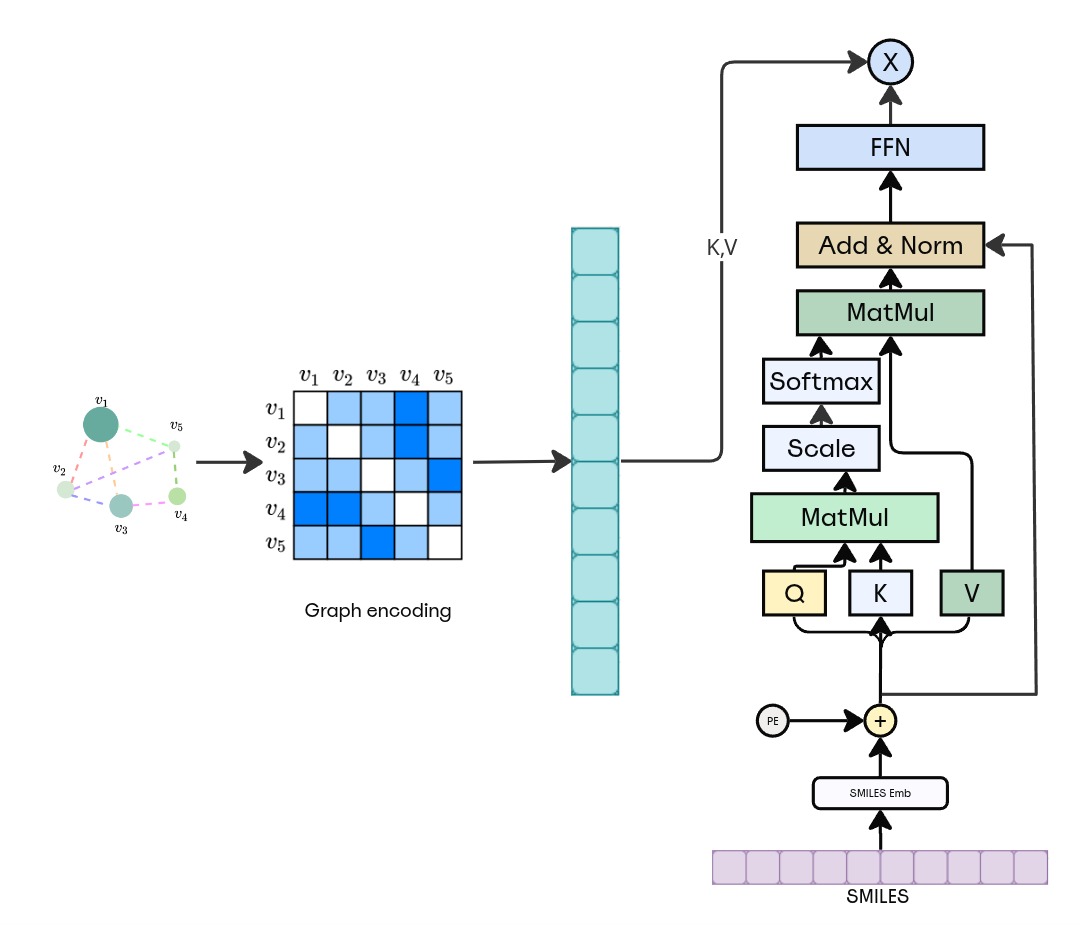

GNN Encoder → Transformer Decoder

Figure: GNN→Transformer architecture. The input molecular graph is embedded by an MPNN (3 layers, hidden=128), a global readout pools the node states into a 512-d vector, and that vector is passed to an 8-layer causal Transformer decoder (d_model=512, heads=8) for SMILES generation.

Graphormer Encoder → Transformer Decoder

Figure: Graphormer→Transformer. The Graphormer encoder integrates centrality, spatial, and edge biases into self-attention across 6 layers (d_model=512). The pooled [VNode] token seeds a Transformer decoder configured like the baseline's.
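For reference, the way these three biases enter the encoder can be written in the notation of the Graphormer paper (Ying et al., 2021). Whether this implementation follows the paper term for term is an assumption. The centrality encoding is added to the input node features, and the spatial and edge terms are added to the attention logits before the softmax:

$$
h_i^{(0)} = x_i + z_{\deg(v_i)}
$$

$$
A_{ij} = \frac{(h_i W_Q)(h_j W_K)^{\top}}{\sqrt{d}} + b_{\phi(v_i, v_j)} + c_{ij},
\qquad
c_{ij} = \frac{1}{N} \sum_{n=1}^{N} x_{e_n} \left(w_n^{E}\right)^{\top}
$$

Here $z_{\deg(v_i)}$ is a learned embedding indexed by node degree, $\phi(v_i, v_j)$ is the shortest-path distance between atoms $i$ and $j$, $b$ is a learned scalar indexed by that distance, and $c_{ij}$ averages learned projections of the bond features $x_{e_n}$ along the $N$ edges of the shortest path.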

Graphormer + Birdie → Transformer Decoder

Figure: Graphormer + Birdie→Transformer. Same architecture as Graphormer→Transformer, with stochastic Birdie objectives applied per batch to encoder inputs and SMILES targets (infilling, deshuffling, selective copying).
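To make the corruption objectives concrete, here is a simplified sketch of infilling, deshuffling, and plain copying applied to a token list, with one objective drawn at random per example. It is not the Birdie implementation: uniform sampling, the `<mask>` token, the span and chunk sizes, and all function names are assumptions, and graph-side corruption and selective copying are omitted.

```python
import random


def infill(tokens, rng, span_len=3, mask="<mask>"):
    """Replace one random span with a mask token; the target is the removed span."""
    start = rng.randrange(0, max(1, len(tokens) - span_len + 1))
    corrupted = tokens[:start] + [mask] + tokens[start + span_len:]
    target = tokens[start:start + span_len]
    return corrupted, target


def deshuffle(tokens, rng, chunk=2):
    """Shuffle fixed-size chunks; the target is the original order."""
    chunks = [tokens[i:i + chunk] for i in range(0, len(tokens), chunk)]
    rng.shuffle(chunks)
    corrupted = [tok for c in chunks for tok in c]
    return corrupted, list(tokens)


def copy(tokens, rng):
    """Standard copying (autoencoding): input and target are identical."""
    return list(tokens), list(tokens)


OBJECTIVES = [infill, deshuffle, copy]


def sample_objective(tokens, rng):
    """Pick one objective uniformly at random and apply it."""
    objective = rng.choice(OBJECTIVES)
    return objective.__name__, objective(tokens, rng)


rng = random.Random(0)
tokens = list("CC(=O)O")
name, (corrupted, target) = sample_objective(tokens, rng)
```

Each call yields a `(corrupted, target)` pair that can be tokenized and batched like an ordinary SMILES example, so the decoder trains on a mixture of reconstruction tasks.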

Experiments & Results

- Training: Batch size 16, 50 epochs, Adam lr=5e-6, early stopping (patience=5).

- Metrics:

  - Final total loss: GNN=1.1, Graphormer=0.4, Graphormer+Birdie=0.3.

  - Chemical validity (greedy decoding): 81.7%, 91.3%, 92.0% respectively.
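The early-stopping rule in the training setup can be sketched as a small helper. The patience of 5 comes from the configuration above; monitoring validation loss, the `min_delta` parameter, and the made-up loss history are assumptions for illustration.

```python
class EarlyStopping:
    """Stop when the monitored loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=5)
history = [1.0, 0.8, 0.7, 0.71, 0.72, 0.70, 0.75, 0.74, 0.6]  # made-up losses
stopped_at = None
for epoch, loss in enumerate(history):
    if stopper.step(loss):
        stopped_at = epoch
        break
```

In this toy history the best loss is reached at epoch 2, the next five epochs fail to improve on it, and training stops at epoch 7 before the later drop to 0.6 is ever seen, which is the usual trade-off of a short patience window.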

Loss and validity comparisons

| Model | Final Total Loss | Chemical Validity (%) |
|---|---|---|
| GNN → Transformer | 1.10 | 81.7 |
| Graphormer → Transformer | 0.40 | 91.3 |
| Graphormer + Birdie → Transformer | 0.30 | 92.0 |
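A validity percentage of this kind can be computed by counting how many generated strings a validator accepts. The sketch below is an assumption about the procedure, not the project's evaluation script: `validity_rate` takes the validator as an argument, `rdkit_is_valid` relies on `Chem.MolFromSmiles` returning `None` for strings RDKit cannot parse, and `balanced_parens` is a toy stand-in so the example runs without RDKit installed.

```python
def validity_rate(smiles_list, is_valid):
    """Percentage of strings accepted by the `is_valid` callable."""
    if not smiles_list:
        return 0.0
    n_valid = sum(1 for s in smiles_list if is_valid(s))
    return 100.0 * n_valid / len(smiles_list)


def rdkit_is_valid(smiles):
    """True if RDKit can parse and sanitize the SMILES string."""
    from rdkit import Chem  # lazy import: the rest of the sketch runs without RDKit
    return Chem.MolFromSmiles(smiles) is not None


def balanced_parens(smiles):
    """Toy stand-in validator: checks only that parentheses are balanced."""
    depth = 0
    for ch in smiles:
        depth += ch == "("
        depth -= ch == ")"
        if depth < 0:
            return False
    return depth == 0


samples = ["CC(=O)O", "CC(=O", "CCO", "C)C("]
rate = validity_rate(samples, balanced_parens)
```

With RDKit available, `validity_rate(generated_smiles, rdkit_is_valid)` gives the chemically meaningful figure; the parenthesis check only catches one class of syntax error.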

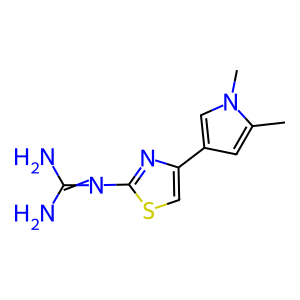

**Sample molecules from Graphormer+Birdie model**

Findings:

- Graphormer’s structural biases drive large gains over GNNs.

- Birdie corruption objectives further boost robustness and validity.

- Ablations confirm spatial encoding and validity loss are most critical.

Conclusion

Combining graph-aware self-attention with corruption-based pretraining substantially improves molecular SMILES generation. The Graphormer encoder captures global topology effectively, and Birdie objectives reinforce decoder robustness, achieving a 92% chemical validity rate and strong convergence behavior.

Future Work

- Multi-objective training: Incorporate property‐driven rewards (e.g., logP, drug-likeness).

- Scale-up: Pretrain on larger, diverse chemical libraries.

- Conditional generation: Guide molecule design toward target functionalities.

- Hybrid representations: Explore SELFIES or graph‐based decoders.

Citations

- Basnet, S. (2025). Generative Deep Learning Experiment in Molecule Generation (Report).

- Ying, C. et al. (2021). Do Transformers Really Perform Bad for Graph Representation? (Graphormer).

- Hu, W. et al. (2020). Strategies for Pre‐training Graph Neural Networks.

- Vaswani, A. et al. (2017). Attention Is All You Need.

- Krenn, M. et al. (2020). SELFIES: a robust molecular string representation.

- Kipf, T. & Welling, M. (2017). Semi‐Supervised Classification with Graph Convolutional Networks.

- RDKit: Open‐source cheminformatics; https://www.rdkit.org